Introduction to 3D Scanning with Sven Johnson and Parixit Davé

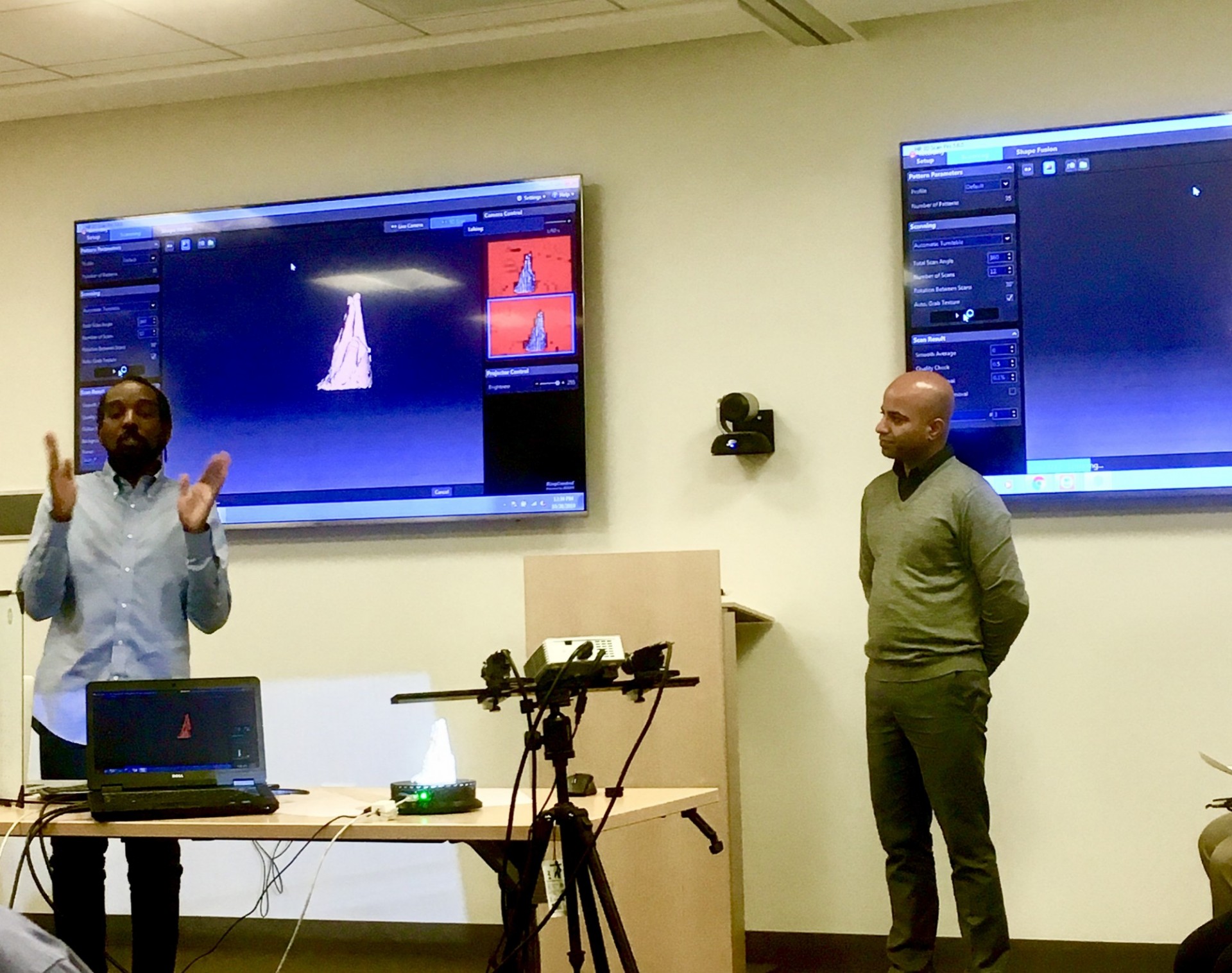

At our October 28th Lunch and Learn, we were excited to host two CUIT colleagues, Senior Application Systems Developer Sven Johnson and Emerging Technologies Director Parixit Davé, who explained how 3D scanning works and how to get started with it yourself.

3D scanning, as explained by Johnson and Davé, is the process of turning a physical object into a digital representation. It is usually considered the 'input,' with the better-known process of 3D printing as the 'output.' The scope of 3D scanning is broad: different scanning technologies can capture anything from physical objects, to photos, to complete environments (such as cities or the outdoors). Its uses are limited only by what can be captured on camera. Current examples of 3D scanning include:

- Motion capture, used in movies and video games

- Face ID

- Scanning the ruins of cities and using other technologies to reconstruct how they looked when intact. The reconstruction can then be brought into an augmented or virtual reality experience so people can see the ruins in their complete form

- Recreating entire cities from crowd-sourced photos using a technique called photogrammetry

A project that Emerging Technologies is currently sponsoring also uses 3D scanning as a key component: Professor Severin Fowles of Barnard College’s Archeology Department is working with members of the Barnard IMATS team to 3D scan artifacts from the Picuris Pueblo tribe in New Mexico. These artifacts are currently held in the Spinden Collection at the American Museum of Natural History, and the goal is to 3D scan and print them, keeping the copies at the museum and allowing the originals to be repatriated to the Picuris Pueblo.

The digital representation is built by creating a point cloud: a set of measured points, each recording a distance and position, that together represent the surface of the scanned item. With enough points, neighboring points can be connected into a mesh, which forms the digital representation of the scanned object.
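To make the point-cloud-to-mesh idea concrete, here is a minimal Python sketch. It assumes the scanner sampled depths on a regular grid, which makes it trivial to decide which points are neighbors; real scans are usually unordered, and dedicated surface reconstruction tools handle that case. The function names `grid_point_cloud` and `grid_mesh` and the sample depths are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[int, int, int]  # indices into the point list


def grid_point_cloud(depths: List[List[float]], spacing: float = 1.0) -> List[Point]:
    """Turn a grid of measured depths into (x, y, z) points, row by row."""
    return [
        (col * spacing, row * spacing, z)
        for row, line in enumerate(depths)
        for col, z in enumerate(line)
    ]


def grid_mesh(rows: int, cols: int) -> List[Triangle]:
    """Connect neighboring grid points into triangles (two per grid cell)."""
    triangles: List[Triangle] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of the cell's top-left point
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return triangles


# Made-up depth samples: a small bump in the middle of a flat surface.
depths = [
    [0.0, 0.1, 0.0],
    [0.1, 0.5, 0.1],
    [0.0, 0.1, 0.0],
]
points = grid_point_cloud(depths)
mesh = grid_mesh(len(depths), len(depths[0]))
print(len(points), "points,", len(mesh), "triangles")
```

Each triangle stores three indices into the point list rather than copies of the coordinates, which is how common mesh file formats tend to represent surfaces as well.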

There are two ways to create the point cloud: contact and non-contact scanning. Contact scanning uses physical probes that touch the object, and it can produce highly accurate readings, especially on curved surfaces. However, it is often unsuitable for delicate objects that may break when probed.

Non-contact scanning uses radiation of some kind, or the reflection and refraction of light, to construct the point cloud. Examples include light scanners, LIDAR (used for environment mapping in many self-driving cars), and other similar technologies. Many scanners also project a pattern onto the object and use the distortion of that pattern to aid measurement.
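As a rough illustration of how a non-contact measurement can become a point in the cloud, the sketch below assumes a pulsed, time-of-flight style of LIDAR: the scanner times a light pulse's round trip, halves the distance travelled, and combines it with the beam's direction. This is one common approach and not necessarily how the scanners shown in the talk work; the function names and sample values are hypothetical.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the surface: the pulse travels there and back, so halve it."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def beam_to_point(distance_m: float, azimuth_deg: float,
                  elevation_deg: float) -> Tuple[float, float, float]:
    """Convert a measured distance plus beam angles into an (x, y, z) point."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance_m * math.cos(el) * math.cos(az)
    y = distance_m * math.cos(el) * math.sin(az)
    z = distance_m * math.sin(el)
    return (x, y, z)


# Assumed sample reading: a 200-nanosecond round trip, beam aimed
# 30 degrees to the side and 10 degrees upward.
distance = tof_distance_m(200e-9)
point = beam_to_point(distance, azimuth_deg=30.0, elevation_deg=10.0)
print(f"distance: {distance:.2f} m, point: {point}")
```

Repeating this for many beam directions yields the set of points that makes up the point cloud.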

Johnson and Davé also demonstrated several types of 3D scanning. In addition to a demo with a high-end HP scanner, they introduced several free phone apps and showed the extent of their scanning capabilities. They used the scanning apps Qlone and Scandy Pro to 3D scan both physical objects and human faces. Other recommended apps included Meshroom and Regard3D.