Automating 3D Model Generation for VR with Generative Adversarial Networks

Virtual Reality is a rapidly developing technology that creates fascinating, immersive three-dimensional experiences. These experiences have become more detailed and interactive over time. Sam Birley, the lead developer at Rewind, notes that users expect everything in VR to be interactive. He says that the focus of VR development, in contrast to game development, has “shifted from macro to micro; we assume that individual scenes will be far more interactive and reactive to the player. Junk lying on the floor that would be a simple static mesh in a game becomes physics enabled, graspable, as doing otherwise would likely break presence.”

Just as they expect interactivity, users generally expect high-resolution graphics in a seamless VR experience. They expect the objects in the virtual world to have the right scale, color, shading, and texture. These 3-D objects, also known as assets, form the bedrock of any VR experience. Only after these assets have been sketched and then digitally modeled in software can they be imported into a VR engine, where their interactions are coded to create an interactive virtual world.

Currently, the assets for any VR experience need to be created by a team of specialized designers using 3-D modelling software. This is a painstaking process because every object in the virtual world needs to be created from scratch. Most modelling software has a steep learning curve, and designers who are proficient in it are difficult to come by. Together, these factors create a major bottleneck in developing VR applications, a problem I also discussed in my previous article.

To ease this bottleneck, researchers have been trying to automate the production of 3-D models using machine learning. In particular, they have had success synthesizing realistic 3-D models from 2-D photos with a class of neural networks called generative adversarial networks (GANs).

Generative Adversarial Networks are a machine learning framework in which two neural networks are trained in an adversarial fashion. In other words, the two networks are trained to play a ‘game’ against each other: the first network has the objective of generating new data by learning from real-world data, and the second has the objective of predicting whether a given sample was generated by the first network or comes from the real-world data. It has been shown that there is a unique ‘solution’ to this game, in which the first network generates data so well that the second network cannot tell whether a sample is real or generated; that is, the second network predicts that the sample is generated with probability ½. This means that after sufficient training in this adversarial setting, the first network is capable of producing new samples just like the training data!¹
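To make the ‘solution’ of this game concrete, here is a minimal sketch in plain Python. It assumes a toy world with only three possible outcomes, so both the real data and the generator can be written as short probability lists. The function names (`optimal_discriminator`, `game_value`) and the numbers are made up for illustration; real GANs use neural networks and learn from samples rather than known probabilities.

```python
import math

def optimal_discriminator(p_data, p_gen):
    """Best possible 'this sample is real' probability for each outcome."""
    return [pd / (pd + pg) if (pd + pg) > 0 else 0.5
            for pd, pg in zip(p_data, p_gen)]

def game_value(p_data, p_gen, d):
    """Standard GAN objective: E_data[log D(x)] + E_gen[log(1 - D(x))]."""
    value = 0.0
    for pd, pg, dx in zip(p_data, p_gen, d):
        if pd > 0:
            value += pd * math.log(dx)
        if pg > 0:
            value += pg * math.log(1.0 - dx)
    return value

# Toy distributions over three outcomes (illustrative numbers only).
p_data = [0.5, 0.3, 0.2]
poor_generator = [0.1, 0.1, 0.8]      # does not match the real data
perfect_generator = list(p_data)      # matches the real data exactly

d_poor = optimal_discriminator(p_data, poor_generator)
d_perfect = optimal_discriminator(p_data, perfect_generator)

v_poor = game_value(p_data, poor_generator, d_poor)
v_perfect = game_value(p_data, perfect_generator, d_perfect)

print("discriminator vs poor generator:   ", d_poor)
print("discriminator vs perfect generator:", d_perfect)
print("game value (poor, perfect):", v_poor, v_perfect)
```

When the generator matches the data exactly, the best the discriminator can do is answer ½ for every outcome, and the value of the game falls to its minimum of −log 4. Any mismatch gives the discriminator something to exploit and raises the value.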

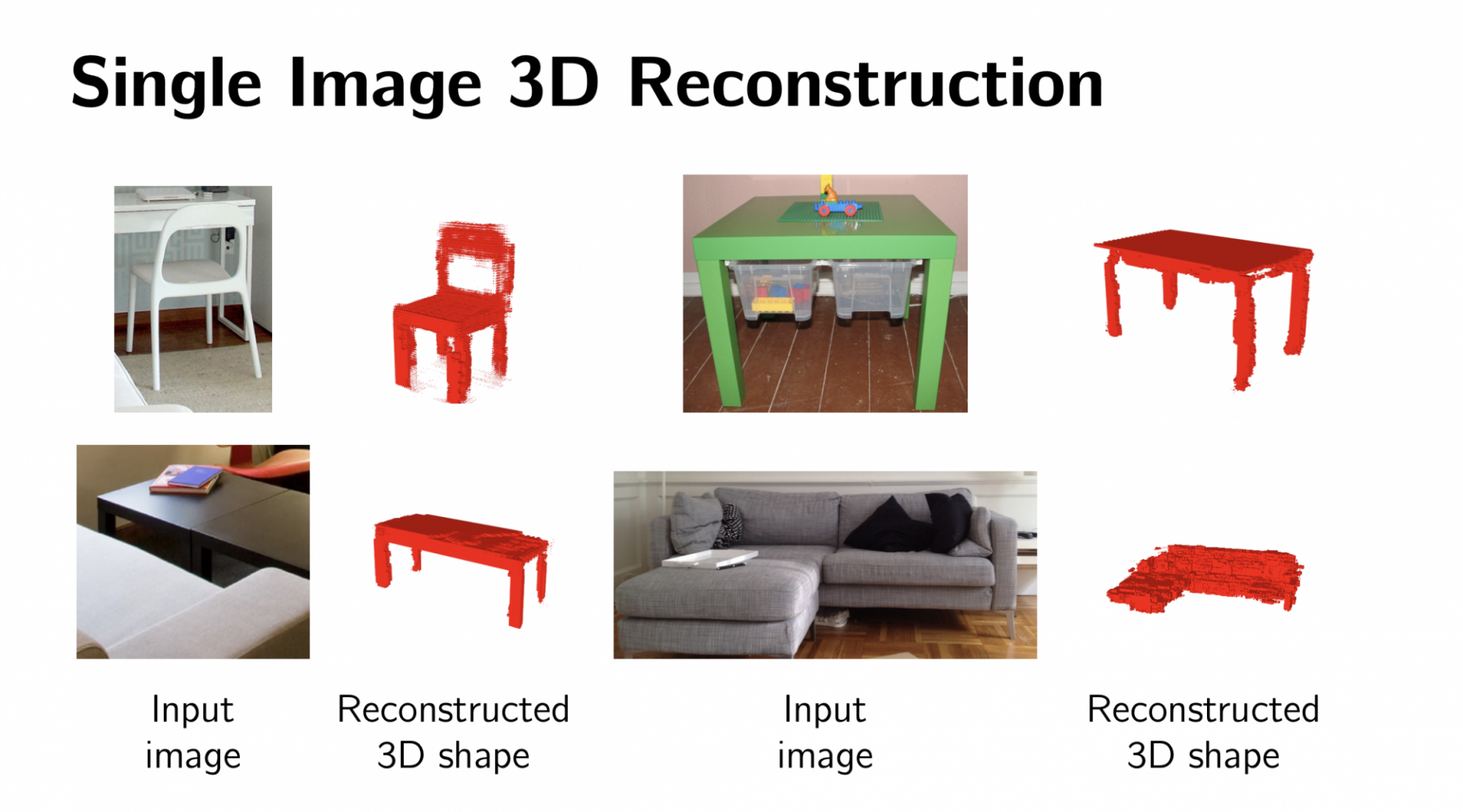

Jiajun Wu, Chengkai Zhang, and their colleagues at MIT used this technique to generate 3D models of IKEA furniture from 2D images of it.² They created a technique called 3D-GAN, which turns a “latent representation” of the 2D image into a 3D model. A latent representation in this context is a vector of numbers that summarizes all the pixels in the image: a type of encoding that has fewer values than the original image has pixels. To calculate the latent representation of the 2D image, they use a variational auto-encoder, a well-established machine learning technique for learning latent representations.
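As a rough illustration of what “a vector with fewer values than pixels” means, the sketch below encodes a small random image into a short latent vector in the style of a variational auto-encoder. Everything here is an assumption made for the example: the image size, the latent size, the random untrained linear weights, and the helper names `encode` and `sample_latent`. A real encoder is a trained deep network, and this is not the architecture used in 3D-GAN.

```python
import math
import random

def encode(pixels, w_mean, w_logvar):
    """Map a flattened image to the mean and log-variance of a latent Gaussian."""
    mean = [sum(w * p for w, p in zip(row, pixels)) for row in w_mean]
    logvar = [sum(w * p for w, p in zip(row, pixels)) for row in w_logvar]
    return mean, logvar

def sample_latent(mean, logvar, rng):
    """Reparameterization trick: z = mean + sigma * noise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, logvar)]

rng = random.Random(0)
num_pixels, latent_size = 16 * 16, 8   # illustrative sizes only

# A stand-in grayscale image and random (untrained) encoder weights.
image = [rng.random() for _ in range(num_pixels)]
w_mean = [[rng.gauss(0.0, 0.05) for _ in range(num_pixels)]
          for _ in range(latent_size)]
w_logvar = [[rng.gauss(0.0, 0.05) for _ in range(num_pixels)]
            for _ in range(latent_size)]

mean, logvar = encode(image, w_mean, w_logvar)
z = sample_latent(mean, logvar, rng)

print(f"{len(image)} pixels compressed to {len(z)} latent values")
```

In a trained system, the weights would be learned so that a generator can reconstruct a plausible shape from `z` alone.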

In the training process, 3D-GAN learnt intermediate representations of the mapping from 2D images to 3D models in an unsupervised fashion. This means the algorithm was not told the type of object it was creating, nor did it have access to the object's 3D representation when generating the 3D models. Its authors note that a typical way of evaluating representations learned without supervision is to use them as features for classification. Using this evaluation technique, they achieved a shape classification accuracy of 91%.
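The evaluation idea, using learned representations as features for a classifier and then measuring accuracy, can be sketched as follows. This is only an illustration: the two-dimensional feature vectors and labels are invented, and a simple nearest-centroid classifier stands in for whichever classifier the authors actually trained.

```python
def centroid(vectors):
    """Component-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit_centroids(features, labels):
    """Compute one average feature vector per class."""
    grouped = {}
    for feature, label in zip(features, labels):
        grouped.setdefault(label, []).append(feature)
    return {label: centroid(vectors) for label, vectors in grouped.items()}

def predict(centroids, feature):
    """Assign the class whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda label: squared_distance(centroids[label], feature))

def accuracy(centroids, features, labels):
    correct = sum(predict(centroids, f) == y for f, y in zip(features, labels))
    return correct / len(labels)

# Invented "learned features" for a few shapes (hypothetical values).
train_x = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.7]]
train_y = ["chair", "chair", "table", "table"]
test_x = [[0.7, 0.3], [0.3, 0.8], [0.6, 0.5]]
test_y = ["chair", "table", "table"]

centroids = fit_centroids(train_x, train_y)
test_accuracy = accuracy(centroids, test_x, test_y)
print(f"classification accuracy: {test_accuracy:.2f}")
```

The better the unsupervised features separate the object types, the higher this accuracy will be, which is why it serves as a proxy for the quality of the representation.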

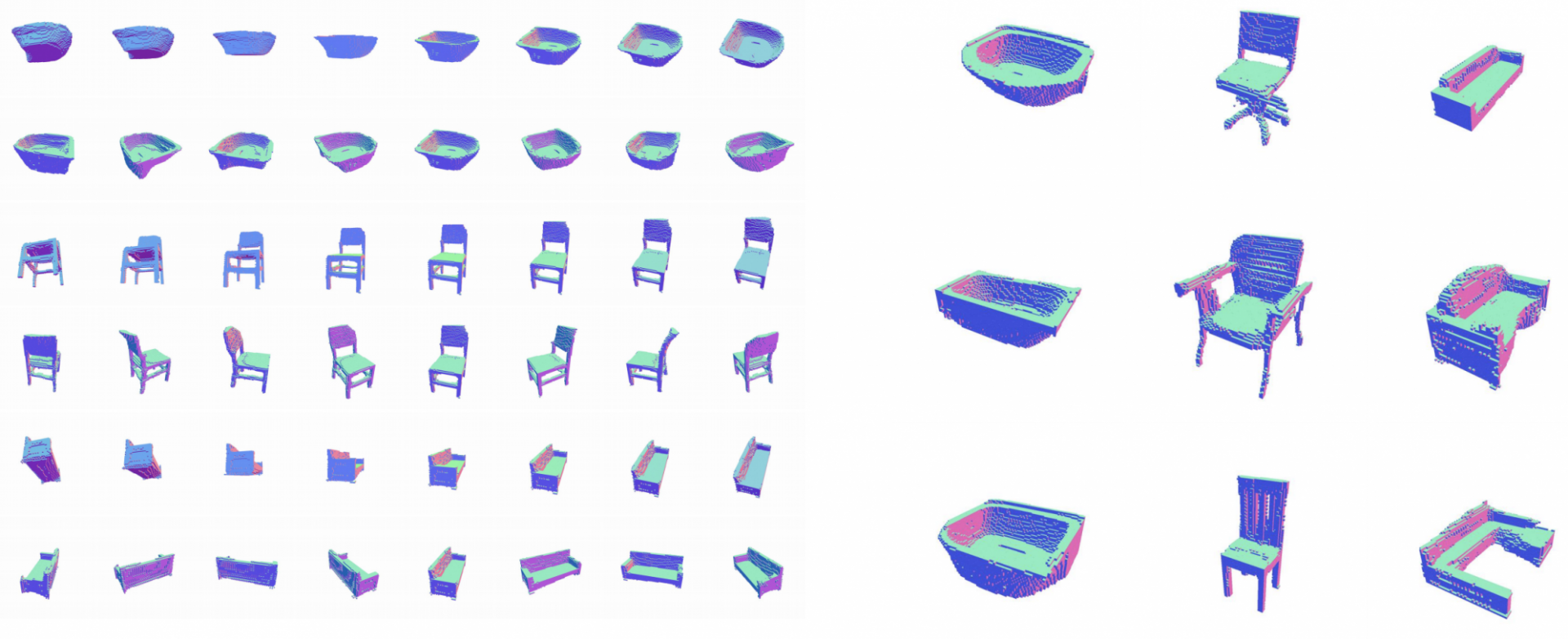

This mapping from a 2D image to a 3D model was truly a breakthrough for 3D modeling. It can create models, albeit low-resolution ones, from regular images with varying lighting conditions, camera angles, and occlusions, and it needs only 25 images per type of object to do so. The technique can create a 3D model from a single image, and it can also use the learnt latent representations to transform one object into another by interpolating between their two representations!
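Interpolating between two latent representations is simple to sketch. The example below assumes plain linear interpolation and uses tiny made-up vectors (`z_chair`, `z_table`); in a real system each intermediate vector would be passed to the generator to produce an in-between 3D shape.

```python
def interpolate(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)   # goes from 0.0 to 1.0
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path

# Hypothetical latent vectors for two objects (illustrative values only).
z_chair = [0.0, 1.0, -2.0]
z_table = [4.0, -1.0, 2.0]

path = interpolate(z_chair, z_table, 5)
for z in path:
    print(z)
```

The first and last vectors are the two original objects, and the ones in between describe shapes that gradually morph from one into the other.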

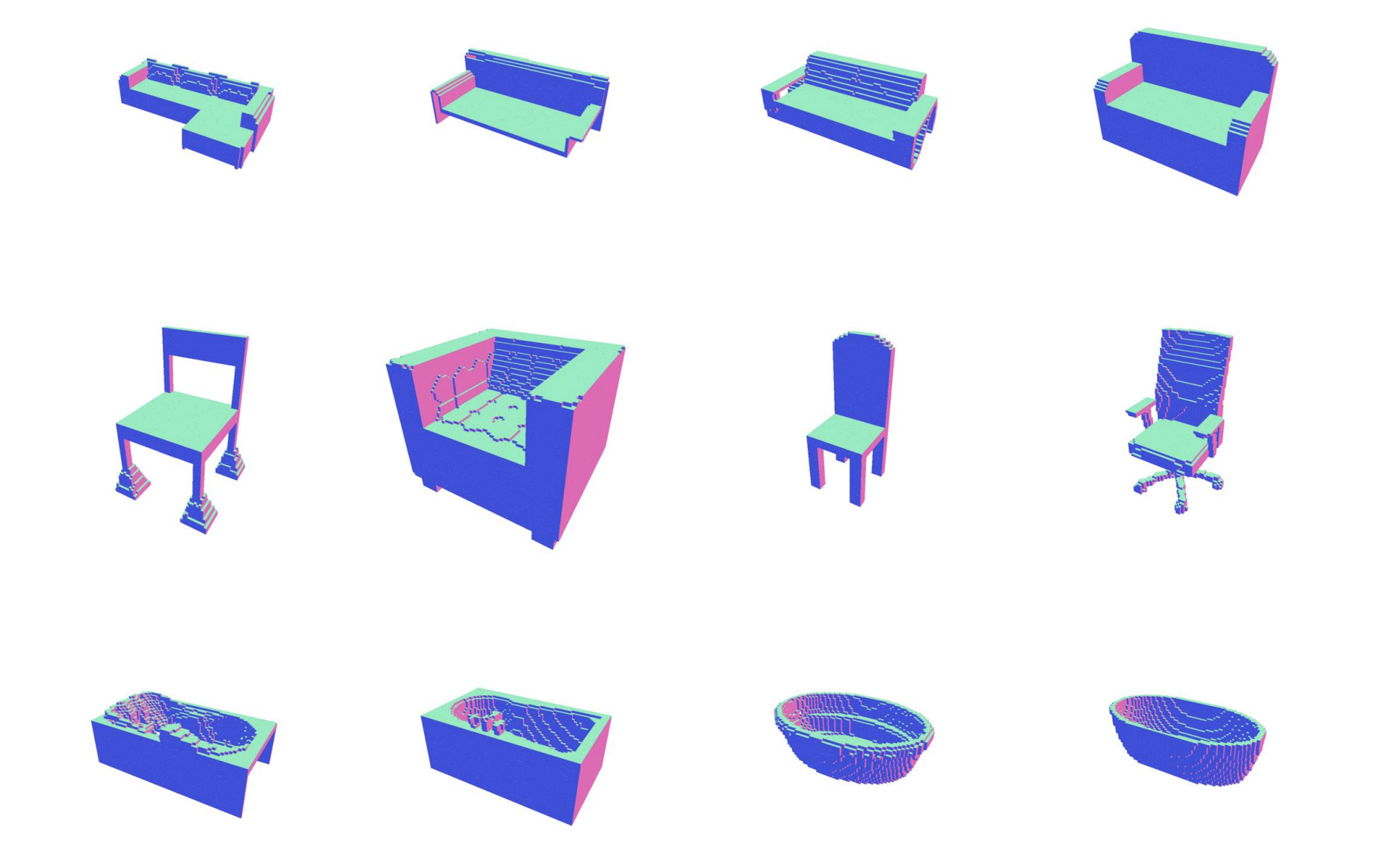

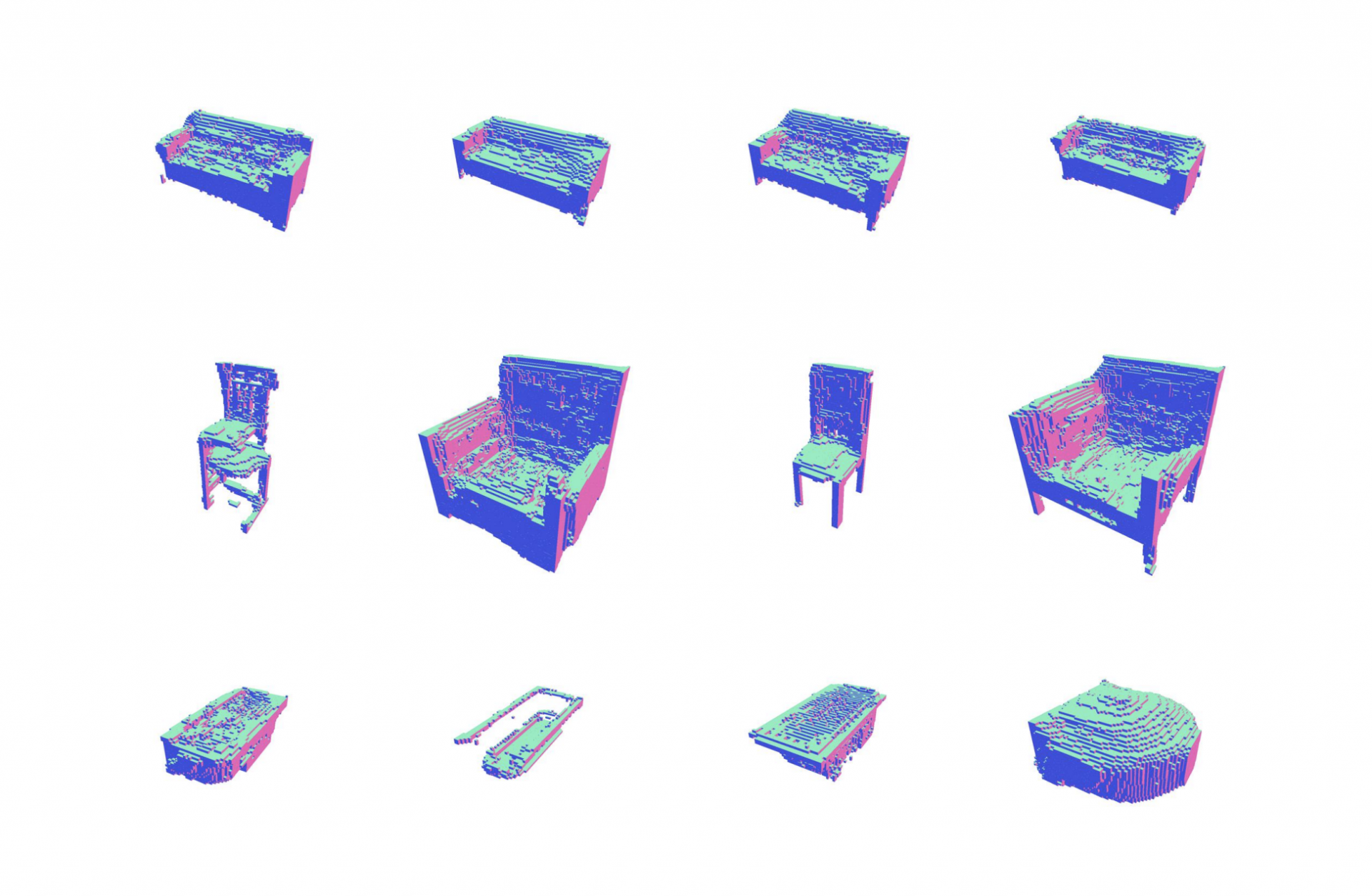

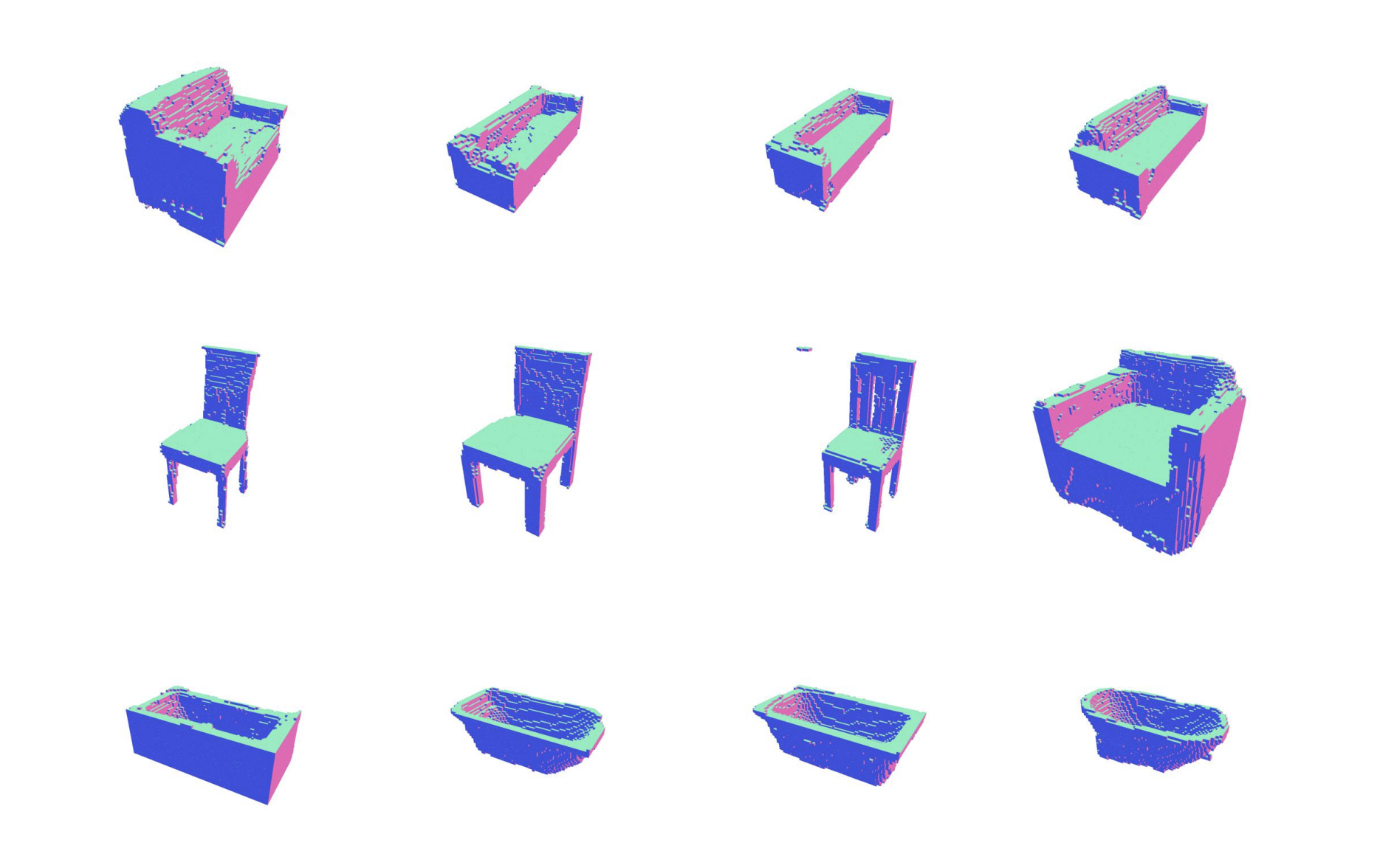

Newer techniques have built upon 3D-GAN to incorporate an automated way of evaluating the generated models. This is done by ‘rendering’ the synthesized 3D model back into a 2D image and comparing it to the input image. Doing so allows what is known as end-to-end learning. The latest such technique, called Inverse Graphics GAN (IG-GAN),³ was published in 2020 by Sebastian Lunz, Yingzhen Li, and two other researchers at Microsoft. Its pipeline uses an off-the-shelf OpenGL renderer. This makes the method more practical than other techniques, because it circumvents the need to build custom rendering engines that are suitable for machine learning settings. The authors evaluated their model on synthetic photos rendered from 3D models as well as on real photos. You can see the models this technique generates from real photos of mushrooms in the wild above. To show the improvement their technique makes, the authors share three different figures: the true 3D data, the output from a previous technique, and the output from IG-GAN, as shown in the accompanying figure. Notice that their method is able to recognize concavities correctly, leading to realistic samples of bathtubs and couches.
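The ‘render and compare’ idea can be sketched with a toy example. The code below assumes a tiny voxel grid, a very crude renderer that only produces a silhouette by looking straight through the grid, and a simple pixel mismatch score. None of this is the IG-GAN pipeline, which relies on a real OpenGL renderer; the names `render_silhouette` and `pixel_mismatch` are hypothetical and exist only for this illustration.

```python
def render_silhouette(voxels):
    """Project a voxel grid (indexed voxels[z][y][x]) along depth.

    A pixel is 1 if any voxel behind it is filled, else 0.
    """
    depth = len(voxels)
    height = len(voxels[0])
    width = len(voxels[0][0])
    return [[1 if any(voxels[z][y][x] for z in range(depth)) else 0
             for x in range(width)]
            for y in range(height)]

def pixel_mismatch(image_a, image_b):
    """Fraction of pixels that differ between two same-sized binary images."""
    total = 0
    differing = 0
    for row_a, row_b in zip(image_a, image_b):
        for a, b in zip(row_a, row_b):
            total += 1
            differing += int(a != b)
    return differing / total

# A made-up 3D model with two depth slices of 3x3 voxels.
voxels = [
    [[0, 1, 0], [0, 1, 0], [0, 0, 0]],   # depth slice 0
    [[0, 0, 0], [0, 1, 1], [0, 0, 0]],   # depth slice 1
]

# A made-up input silhouette the model is supposed to explain.
target = [[0, 1, 0], [0, 1, 1], [0, 1, 0]]

rendered = render_silhouette(voxels)
loss = pixel_mismatch(rendered, target)
print("rendered:", rendered)
print("mismatch:", loss)
```

A training procedure would adjust the 3D model to drive a score like this down, so the quality of the generated shape can be judged automatically from 2D images alone.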

The field of generating 3D models from 2D images is full of exciting developments. Although the resolution of the models generated by these techniques isn’t yet high enough for 3D model development to be completely automated, full automation seems like a very plausible scenario in the future. At the very least, these systems will provide a rapid way to generate basic models for VR and gaming environments, which designers can then touch up. This would drastically ease the bottleneck in VR development and increase the adoption of this emerging technology.

Viren Bajaj

Emerging Technologies Coordinator

Columbia University Information Technology

https://www.linkedin.com/in/viren-bajaj

1 Since neural networks can, in theory, approximate a very broad class of functions, the first network can in principle be trained to generate any kind of data.

2 Research page: http://3dgan.csail.mit.edu/, YouTube coverage: https://www.youtube.com/watch?v=HO1LYJb818Q&ab_channel=TwoMinutePapers

3 Article: https://arxiv.org/pdf/2002.12674.pdf