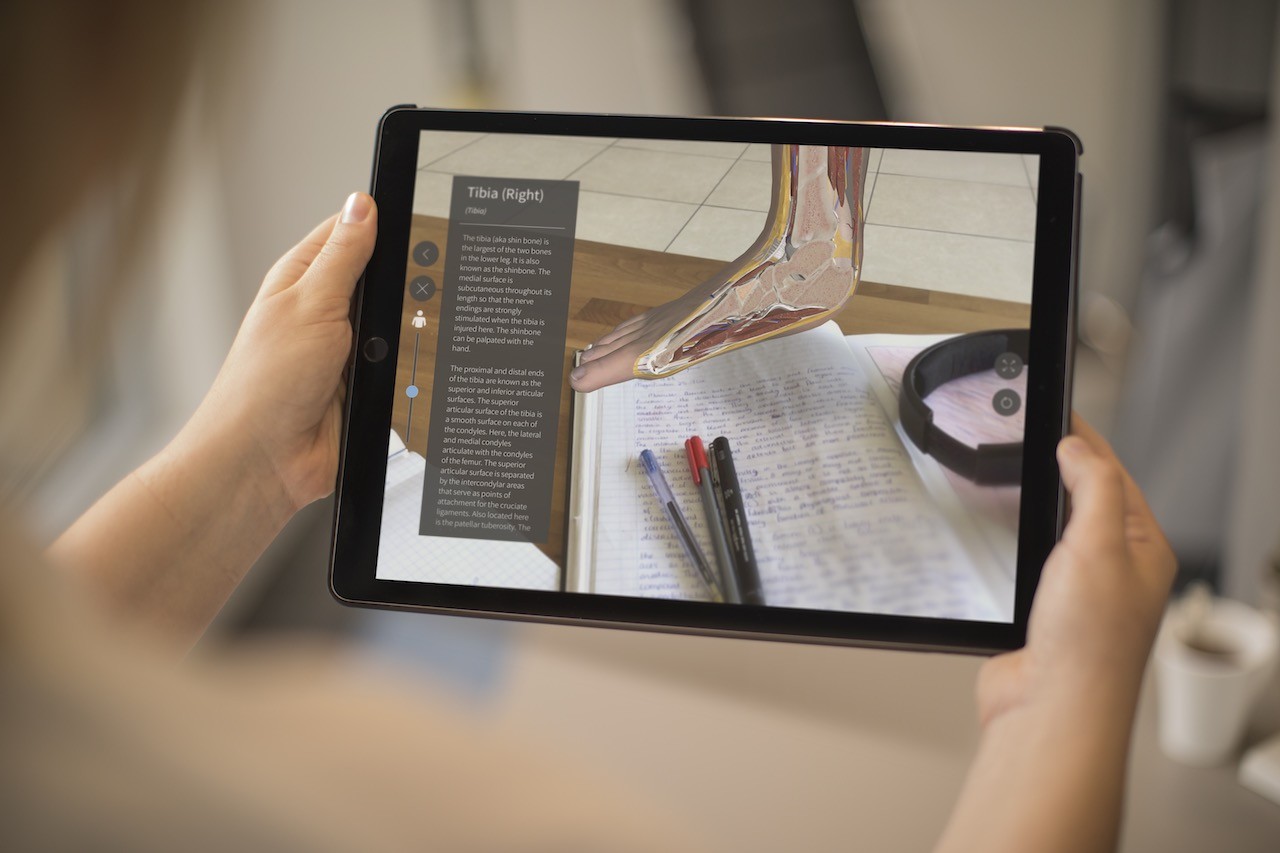

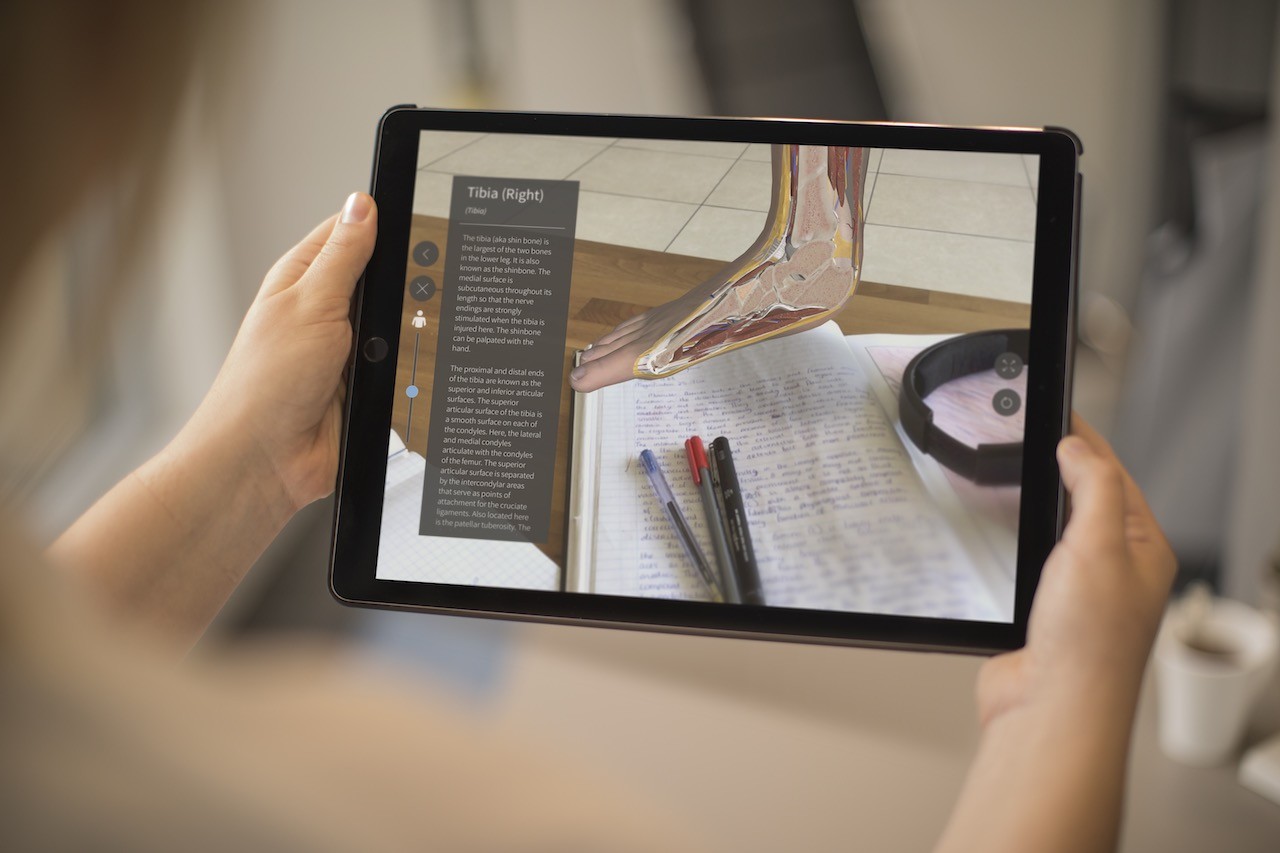

ETC hosted Steve Hayman, a Senior Engineer on Apple's education team who focuses on augmented reality (AR). Apple's approach to AR differs from that of Magic Leap, Microsoft, HP, and others in that there is no head-mounted display. Your iPhone or iPad is the display, and that is all you need to experience AR. While Unity and Unreal Engine are the mainstays of AR development, Apple's Xcode and Swift are used to build AR apps for iOS. Steve started the workshop by presenting various apps that have been developed by people of all ages and explained that one of Apple's goals is to make iOS development accessible to anyone. With over two million apps in the App Store, more developers are starting to code as early as age 7 and releasing their apps to a wide audience of users.

This workshop focused on actual development, so after the introduction it was time to code. The first app we built was a version of the "just light" app, which is simply a white screen used as a flashlight. This was an actual app when the App Store was first introduced. Steve walked everyone through opening Xcode, setting up a project for iOS development, and building out a simple user interface. He also covered the basics of Xcode and how to navigate its menus and options. Once the initial code for our flashlight app was written, the next step was running it in the simulator. Xcode lets you run your code on simulated versions of various iPhone and iPad models, and also deploy it to your actual phone.
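For readers who want to try the flashlight exercise, here is a minimal sketch of what such an app could look like. It assumes a SwiftUI project; the workshop may have built its interface differently, and the name `JustLightApp` is made up for illustration.

```swift
import SwiftUI

// Hypothetical app name; not taken from the workshop.
@main
struct JustLightApp: App {
    var body: some Scene {
        WindowGroup {
            // A plain white view that fills the whole screen,
            // including the areas around the notch and home indicator.
            Color.white
                .ignoresSafeArea()
        }
    }
}
```

Replacing the contents of the app file in a new iOS App project with this and pressing Run in Xcode should launch it in whichever simulator or connected device is selected.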

After getting our feet wet with Xcode, it was time to build our first AR application using Apple's framework, ARKit. ARKit combines device motion tracking, camera scene capture, advanced scene processing, and display conveniences to simplify the task of building an AR experience. You can use these technologies to create many kinds of AR experiences using either the back camera or the front camera of an iOS device. The goal was to take a picture of the Apollo 11 launch that Steve had brought and build an app that would recognize the photo and place a video of the actual launch on top of it. ARKit has built-in functions that recognize images and provide the ability to anchor media over them. With a few tweaks to the code in Xcode, we had our AR app built and were able to deploy it to the iPhone. Steve pushed us to explore other use cases and ideas for building apps and submitting them to the App Store.
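The photo-to-video idea can be sketched roughly as follows. This is not the workshop's code: it assumes a UIKit app using `ARSCNView`, a reference photo added to an asset catalog group named "AR Resources", and a bundled video file called `launch.mp4`. Those names, and the class name `LaunchViewController`, are placeholders.

```swift
import UIKit
import ARKit
import SceneKit
import AVFoundation

// Hypothetical view controller; names are illustrative only.
final class LaunchViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        // Show the camera feed full screen and receive anchor callbacks.
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // Ask ARKit to look for the reference photo(s) in the asset catalog.
        // "AR Resources" is an assumed group name.
        let configuration = ARImageTrackingConfiguration()
        if let images = ARReferenceImage.referenceImages(inGroupNamed: "AR Resources",
                                                         bundle: nil) {
            configuration.trackingImages = images
            configuration.maximumNumberOfTrackedImages = 1
        }
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // Called when ARKit adds an anchor, here when it recognizes the photo.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let imageAnchor = anchor as? ARImageAnchor,
              // "launch.mp4" is an assumed file name for the bundled video.
              let url = Bundle.main.url(forResource: "launch", withExtension: "mp4")
        else { return }

        let player = AVPlayer(url: url)

        // A plane the same physical size as the photo, textured with the video.
        let size = imageAnchor.referenceImage.physicalSize
        let plane = SCNPlane(width: size.width, height: size.height)
        plane.firstMaterial?.diffuse.contents = player

        // SCNPlane is vertical by default; rotate it to lie flat on the image.
        let planeNode = SCNNode(geometry: plane)
        planeNode.eulerAngles.x = -.pi / 2
        node.addChildNode(planeNode)

        DispatchQueue.main.async { player.play() }
    }
}
```

An app like this needs a camera usage description (`NSCameraUsageDescription`) in its Info.plist and has to run on a physical device, since the simulator has no camera to track images with.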

For those interested in Apple development, please see the links below:

Exploring Xcode and Swift

https://developer.apple.com/develop/

Developer Videos from WWDC

https://developer.apple.com/videos/graphics-and-games/ar-vr

Developing with ARKit

https://developer.apple.com/arkit/