The Basics of Language Modeling with Transformers: GPT

Introduction

OpenAI's GPT is a transformer-based language model introduced in the 2018 paper “Improving Language Understanding by Generative Pre-Training” by Radford et al. It was highly successful in its time. The model is first pre-trained in an unsupervised way on a large unlabeled text corpus and then fine-tuned separately for each downstream task. This approach of task-agnostic pre-training followed by fine-tuning differed from the task-specific models, with architectures crafted for each task, that had previously achieved state-of-the-art performance.

Unsupervised Pre-Training

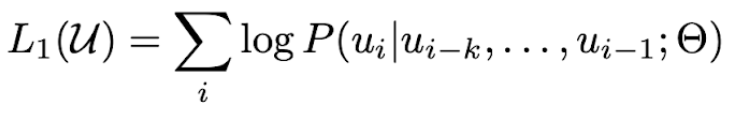

During pre-training, the model is trained to maximize the likelihood of each token given the k tokens that precede it, where k is the size of the context window. Concretely, given an unlabeled corpus U = {u₁, u₂, …, uₙ}, the objective L₁(U):

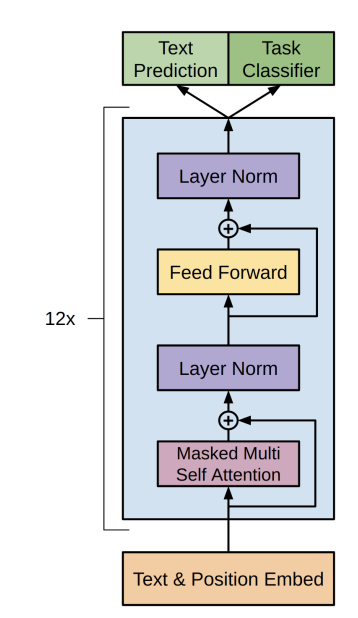

is maximized using stochastic gradient descent over the parameters Θ of a multi-layer transformer decoder (shown below), which models the conditional probability P.
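The objective above sums a log-probability over every position in the corpus. Below is a minimal sketch of that sum. It assumes the model is just a callable returning P(token | context), which stands in for the transformer decoder. `uniform_model` is a hypothetical placeholder that ignores its context; it is not part of GPT.

```python
import math
from typing import Callable, Sequence

# A conditional model maps (context tokens, next token) -> P(next token | context).
# In GPT this role is played by the transformer decoder; here it is any callable.
CondProb = Callable[[Sequence[str], str], float]


def lm_log_likelihood(tokens: Sequence[str], k: int, prob: CondProb) -> float:
    """L1(U) = sum_i log P(u_i | u_{i-k}, ..., u_{i-1}).

    Tokens near the start of the corpus see fewer than k previous tokens.
    """
    total = 0.0
    for i, token in enumerate(tokens):
        context = tokens[max(0, i - k):i]  # at most k preceding tokens
        total += math.log(prob(context, token))
    return total


def uniform_model(vocab_size: int) -> CondProb:
    """Hypothetical stand-in that ignores the context: P = 1 / vocab_size."""
    return lambda context, token: 1.0 / vocab_size


corpus = ["the", "cat", "sat", "on", "the", "mat"]
print(lm_log_likelihood(corpus, k=3, prob=uniform_model(vocab_size=5)))  # about -9.657, i.e. 6 * log(1/5)
```

Training pushes this sum upward. In practice frameworks minimize its negative, the cross-entropy loss.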

Supervised Fine-Tuning

Objective Functions

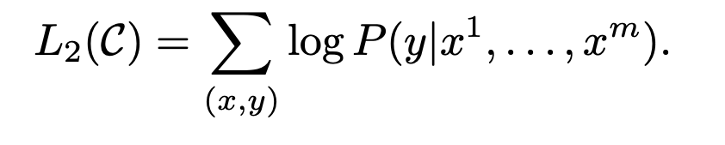

Once the transformer has been pre-trained, a new linear (fully connected) output layer is attached on top of it. The final transformer block's activation at the last input token is fed into this layer and then through a softmax to produce the output required by the task. The tasks considered are Natural Language Inference, Question Answering, Text Similarity, and Classification. The model is fine-tuned on each task using a labelled dataset C, where each data point consists of a sequence of input tokens x = (x₁, …, xₘ) and a label y. The supervised objective over C then becomes L₂(C):

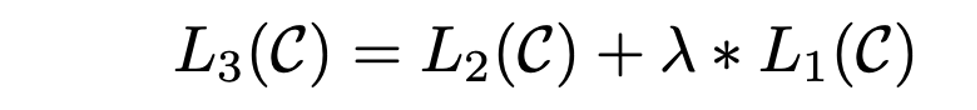
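This objective can be sketched as a linear layer plus a softmax over classes, accumulated as a log-likelihood over labelled examples. The sketch makes several assumptions. The final hidden states are taken as given; in GPT they would come from the pre-trained decoder. `W_y` is named after the paper's output-layer parameters, and its values here are hypothetical. `predict` and `supervised_objective` are illustrative names.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]  # W_y with shape (hidden_dim, num_classes)


def softmax(logits: Sequence[float]) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(h_last: Sequence[float], W_y: Matrix) -> Vector:
    """P(y | x) = softmax(h_last @ W_y), where h_last is the final block's
    activation at the last input token."""
    num_classes = len(W_y[0])
    logits = [sum(h * W_y[d][c] for d, h in enumerate(h_last)) for c in range(num_classes)]
    return softmax(logits)


def supervised_objective(examples: Sequence[Tuple[Vector, int]], W_y: Matrix) -> float:
    """L2(C) = sum over (x, y) in C of log P(y | x_1, ..., x_m).

    Each example is (h_last, y): a precomputed final hidden state and its label index.
    """
    return sum(math.log(predict(h_last, W_y)[y]) for h_last, y in examples)


W_y = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical: 2-d hidden state, 2 classes
print(predict([2.0, 0.0], W_y))  # about [0.881, 0.119]
print(supervised_objective([([2.0, 0.0], 0), ([0.0, 1.0], 1)], W_y))
```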

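The paper also includes the language modeling objective computed on the fine-tuning data, L₁(C), as an auxiliary term weighted by λ (set to 0.5 in the paper). This improves generalization and speeds up convergence. The final objective L₃(C) is:

$$L_3(C) = L_2(C) + \lambda \cdot L_1(C)$$

The paper's ablations found that this auxiliary objective helped on the larger datasets but not on the smaller ones.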
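Below is a minimal sketch of how the supervised and auxiliary language-modeling terms combine. It assumes both are log-likelihoods to be maximized and uses the paper's weight of 0.5. The input values are hypothetical, and `combined_loss` illustrates the usual convention of minimizing the negated objective.

```python
def combined_objective(l2: float, l1: float, lam: float = 0.5) -> float:
    """L3(C) = L2(C) + lam * L1(C).

    Both inputs are log-likelihoods (to be maximized); lam weights the
    auxiliary language-modeling term.
    """
    return l2 + lam * l1


def combined_loss(l2: float, l1: float, lam: float = 0.5) -> float:
    """Gradient-descent frameworks minimize, so the loss is the negated objective."""
    return -combined_objective(l2, l1, lam)


# Hypothetical values: log P(y | x) = -0.7 and an LM log-likelihood of -12.0.
print(combined_loss(-0.7, -12.0))  # about 6.7
```

Setting `lam=0.0` recovers plain supervised fine-tuning, which is the comparison the paper's ablation makes.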

Input Transformations

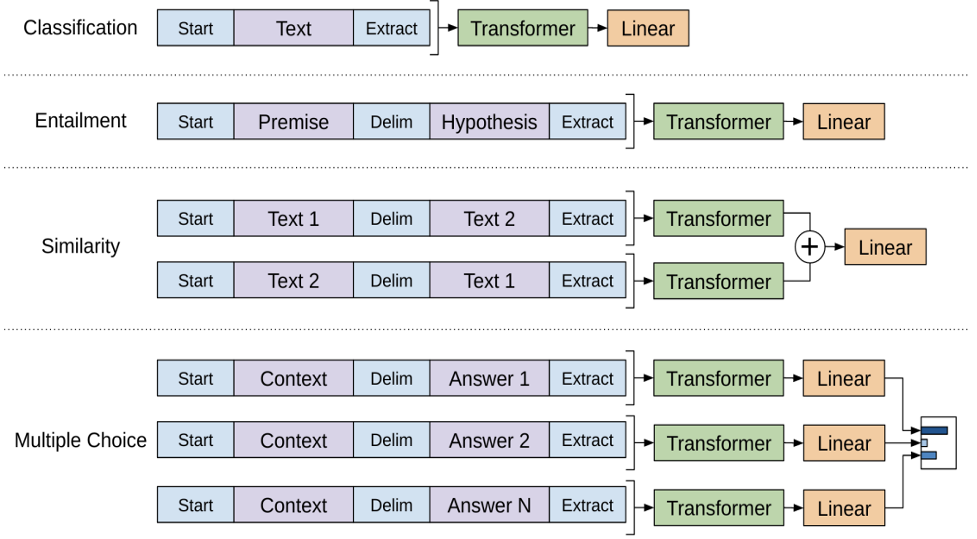

All tasks share one transformation: the input text is placed between randomly initialized start and end tokens.

For Classification, only this standard transformation is needed: the input text is wrapped in the start and end tokens.
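Below is a sketch of the classification input as a token list. The strings `"<s>"` and `"<e>"` are hypothetical placeholders for the special start and end tokens. In the model these are extra tokens with learned, randomly initialized embeddings rather than literal text.

```python
from typing import List

# Hypothetical placeholder strings for the special tokens. In GPT these are
# extra tokens whose embeddings are randomly initialized and learned.
START, END = "<s>", "<e>"


def classification_input(text: List[str]) -> List[str]:
    """Classification: <s> text <e>."""
    return [START, *text, END]


print(classification_input(["a", "great", "movie"]))
# ['<s>', 'a', 'great', 'movie', '<e>']
```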

For Natural Language Inference (entailment), the premise and hypothesis are concatenated with a delimiter token ($) between them.
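Below is a sketch of the entailment input under the same assumption: `"<s>"`, `"$"`, and `"<e>"` are hypothetical placeholder strings for the special tokens.

```python
from typing import List

START, DELIM, END = "<s>", "$", "<e>"  # hypothetical placeholder strings


def entailment_input(premise: List[str], hypothesis: List[str]) -> List[str]:
    """NLI: <s> premise $ hypothesis <e>."""
    return [START, *premise, DELIM, *hypothesis, END]


print(entailment_input(["it", "rains"], ["it", "is", "wet"]))
# ['<s>', 'it', 'rains', '$', 'it', 'is', 'wet', '<e>']
```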

For (Text) Similarity, the two texts being compared have no inherent order, so the input includes both possible orderings. The two texts are separated by the delimiter token ($) and passed through the transformer once, then passed through again with their order swapped. The two resulting representations are added element-wise and fed into the linear output layer.
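Below is a sketch of the two-ordering trick. Following the paper, the two final hidden states are added element-wise, which makes the combined representation independent of argument order. `toy_encoder` is a hypothetical, deliberately order-sensitive stand-in for the pre-trained transformer, and the special-token strings are placeholders.

```python
from typing import Callable, List

START, DELIM, END = "<s>", "$", "<e>"  # hypothetical placeholder strings
# Stand-in for the pre-trained transformer: token sequence -> final hidden state.
Encoder = Callable[[List[str]], List[float]]


def similarity_features(a: List[str], b: List[str], encode: Encoder) -> List[float]:
    """Encode both orderings independently and add the two states element-wise."""
    h_ab = encode([START, *a, DELIM, *b, END])
    h_ba = encode([START, *b, DELIM, *a, END])
    return [x + y for x, y in zip(h_ab, h_ba)]  # this sum feeds the linear output layer


def toy_encoder(tokens: List[str]) -> List[float]:
    """Hypothetical order-sensitive encoder: [sequence length, delimiter position]."""
    return [float(len(tokens)), float(tokens.index(DELIM))]


a, b = ["hello", "there"], ["hi"]
print(similarity_features(a, b, toy_encoder))  # [12.0, 5.0]
print(similarity_features(b, a, toy_encoder))  # [12.0, 5.0], same result
```

Each single ordering gives a different state ([6.0, 3.0] vs. [6.0, 2.0]), but their sum is symmetric in the two texts.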

For Multiple Choice Question Answering, the context document and question are concatenated with each possible answer, with a delimiter token ($) placed before the answer. Each of these sequences is passed through the transformer and linear layer independently. The outputs for all answers are then normalized with a softmax to produce a probability distribution over the possible choices.
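Below is a sketch of the multiple-choice setup. It builds one sequence per answer, scores each independently, and applies a softmax across answers. The scorer stands in for "transformer + linear layer" and returns one logit per sequence. `toy_scorer` is a hypothetical heuristic used only to make the example runnable, and the special-token strings are placeholders.

```python
import math
from typing import Callable, List

START, DELIM, END = "<s>", "$", "<e>"  # hypothetical placeholder strings
Scorer = Callable[[List[str]], float]  # token sequence -> scalar logit


def multiple_choice_probs(context: List[str], question: List[str],
                          answers: List[List[str]], score: Scorer) -> List[float]:
    """Score <s> context question $ answer <e> for each answer, then softmax across answers."""
    logits = [score([START, *context, *question, DELIM, *ans, END]) for ans in answers]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_scorer(tokens: List[str]) -> float:
    """Hypothetical stand-in: counts answer tokens that also appear before the delimiter."""
    delim = tokens.index(DELIM)
    seen, answer = set(tokens[1:delim]), tokens[delim + 1:-1]
    return float(sum(tok in seen for tok in answer))


probs = multiple_choice_probs(["the", "sky", "is", "blue"], ["what", "color", "is", "the", "sky"],
                              [["blue"], ["green"]], toy_scorer)
print(probs)  # about [0.731, 0.269]
```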

Performance of GPT

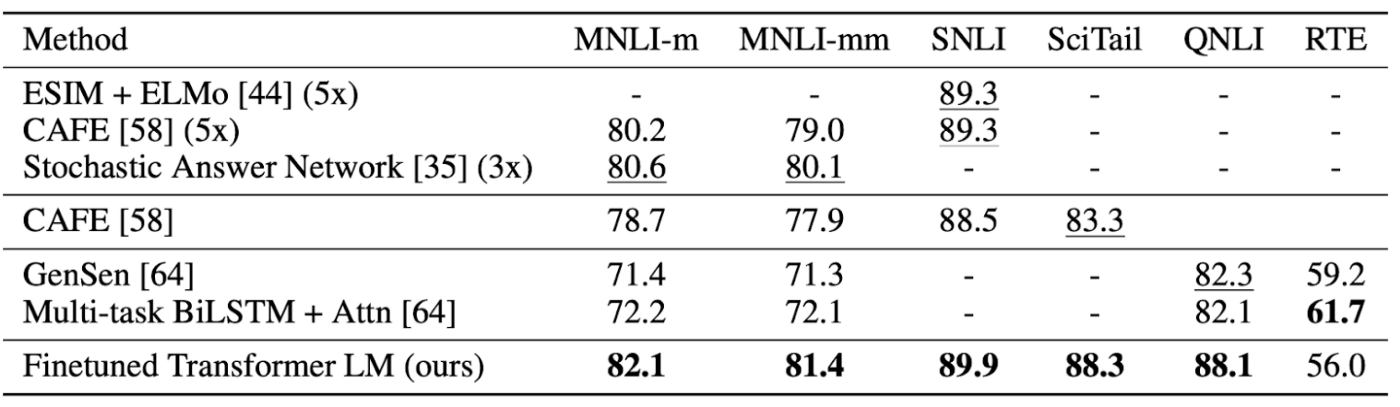

Natural Language Inference (NLI)

GPT outperformed the previous state-of-the-art models on all NLI datasets (MultiNLI, SNLI, SciTail, and QNLI) except Recognizing Textual Entailment (RTE).

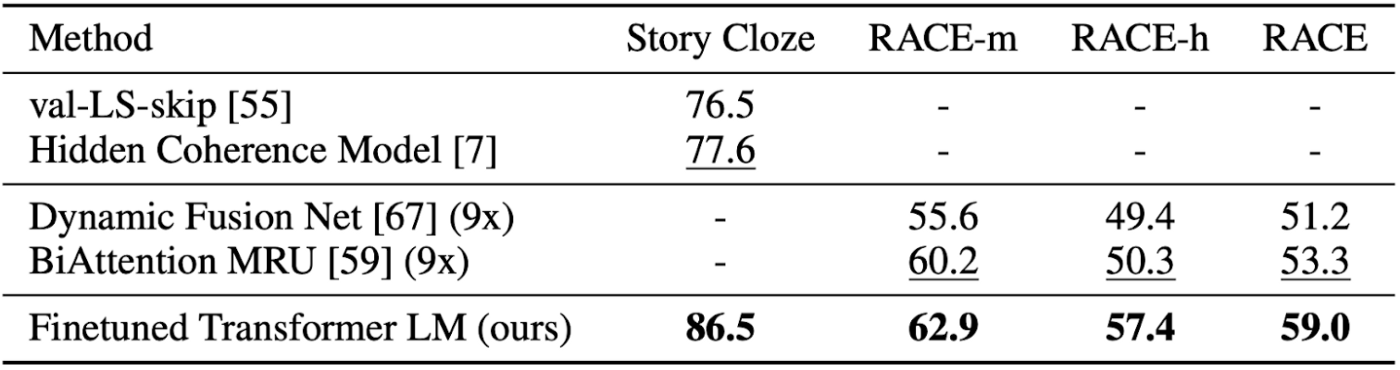

Question Answering (QA)

GPT outperformed the previous state-of-the-art models on both QA datasets evaluated, the Story Cloze Test and RACE.

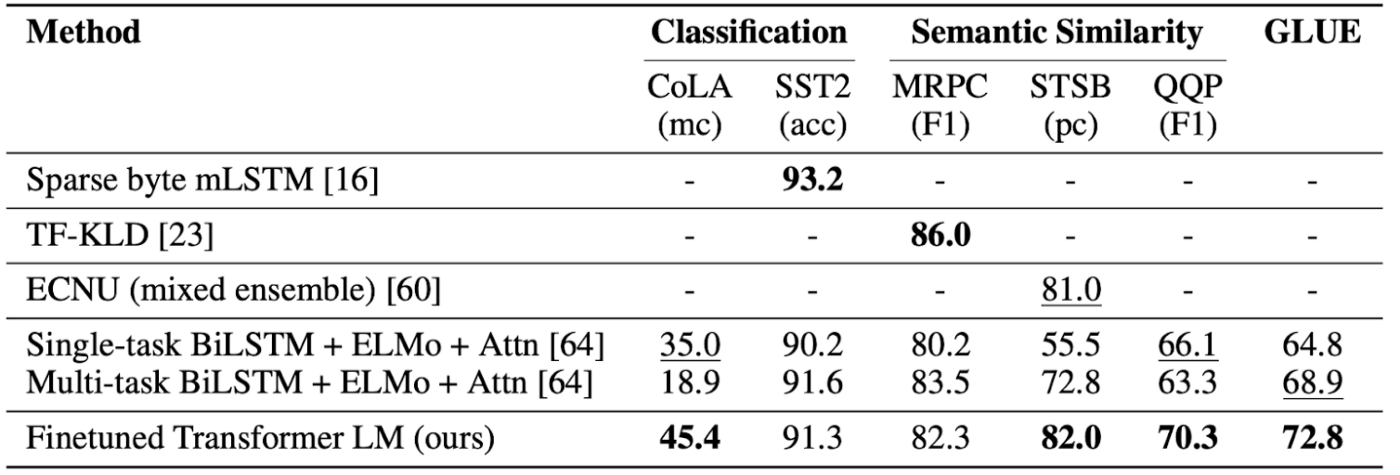

Similarity and Classification

GPT outperformed the previous state of the art on most semantic similarity and classification datasets. The exceptions were the Stanford Sentiment Treebank (SST-2) and the Microsoft Research Paraphrase Corpus (MRPC).

Conclusion

In this article I discussed how GPT is pre-trained and fine-tuned, and how it achieved state-of-the-art results on most of the NLI, QA, similarity, and classification datasets it was evaluated on. This model paved the way for GPT-2 and the famous GPT-3, which are essentially larger versions of GPT with a few tweaks.

References

Radford, A., Narasimhan, K., Salimans, T., & Sutskever, I. (2018). “Improving Language Understanding by Generative Pre-Training.” https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf